StackOne connects you to hundreds of providers through one API. But things go wrong (expired keys, missing scopes, unsupported actions), and when they do, we surface the provider's raw error. The problem is that a Workday auth error referencing Workday-specific concepts doesn't help much in our dashboard.

I'm Will Leeney, AI Engineer at StackOne. I've completed a PhD studying best practices for evals and evaluating under randomness. I spent my first month at StackOne building an AI agent that translates these provider errors into clear resolution steps. Here's that story. The secret sauce? Starting with evals from day one.

Before writing any code, I interviewed Bryce, one of our solutions engineers, to understand the manual process of resolving errors. Users encounter errors in three places: account linking, account status, and connection logs. Each error type has documented solutions scattered across our guides. The goal was simple: automatically search our docs and generate resolution steps, freeing up Bryce's time for building integrations.

I resisted the urge to build a complex RAG system with time-series analysis and previous resolution patterns. Instead, I sketched the simplest possible flow (see Figure 1). Ship first, improve later.

Figure 1: A rough sketch of what the agent will do to generate the resolution steps.


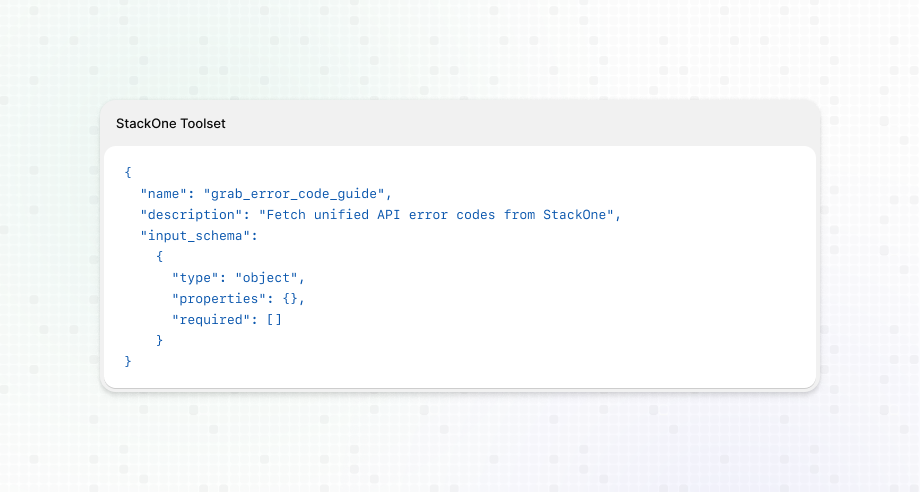

The core of the system is Claude with custom tools that search our documentation. Here's what the tool registration looks like:

```json
{
  "name": "grab_error_code_guide",
  "description": "Fetch unified API error codes from StackOne",
  "input_schema": {
    "type": "object",
    "properties": {},
    "required": []
  }
}
```

One thing that massively sped up development: all our docs are exposed as plain .txt and .md files at docs.stackone.com/llms.txt. No scraping, no complex parsing: just grab the text and go. This turned what could've been days of data pipeline work into a simple fetch request.
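To make the tool registration concrete, here is a minimal sketch of how a registered tool might be wired to a local handler and driven by a tool-use loop. The message shapes mirror the Anthropic Messages API's `tool_use` and `tool_result` content blocks, but everything else is an assumption: `make_tools`, `run_agent`, and the injected `call_model` callable are hypothetical, and pointing `grab_error_code_guide` at the llms.txt index (rather than a specific error-code page) is a placeholder, since the post doesn't say which file each tool fetches.

```python
import urllib.request
from typing import Callable

# From the post: docs are exposed as plain text under docs.stackone.com/llms.txt.
# Assumption: which page each tool fetches; here the tool just uses the index.
LLMS_TXT_URL = "https://docs.stackone.com/llms.txt"


def fetch_text(url: str) -> str:
    """Download a plain-text doc page; no scraping or HTML parsing needed."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8")


def make_tools(fetch: Callable[[str], str]) -> dict[str, Callable[[dict], str]]:
    """Hypothetical registry mapping tool names (as registered with Claude) to handlers."""
    return {
        # Takes no input, matching the empty input_schema above.
        "grab_error_code_guide": lambda _tool_input: fetch(LLMS_TXT_URL),
    }


def run_agent(call_model, tools, user_message: str, max_turns: int = 5) -> str:
    """Call the model, execute any requested tools, feed results back, repeat."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):
        response = call_model(messages)
        messages.append({"role": "assistant", "content": response["content"]})
        if response["stop_reason"] != "tool_use":
            # Final answer: concatenate the text blocks.
            return "".join(b["text"] for b in response["content"] if b["type"] == "text")
        tool_results = [
            {
                "type": "tool_result",
                "tool_use_id": block["id"],
                "content": tools[block["name"]](block["input"]),
            }
            for block in response["content"]
            if block["type"] == "tool_use"
        ]
        messages.append({"role": "user", "content": tool_results})
    raise RuntimeError("agent did not finish within max_turns")
```

In production, `call_model` would wrap the Anthropic SDK's `messages.create` call; its response objects expose `stop_reason` and `content` as attributes rather than dict keys, so a thin adapter is needed. Injecting the fetcher keeps the loop testable without network access.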

I built five tools:

- grab_error_code_guide: fetches our error code reference
- grab_troubleshooting_guide: fetches our troubleshooting guide
- search_stackone_docs: searches all of our docs
- search_provider_guides: searches our provider-specific documentation
- search_docs: vector search across all documentation using Turbopuffer (a vector database)

The trickiest part was extracting the right context from messy logs. I used Pydantic for structured outputs with gpt-4o-mini to pull the exact fields needed for resolution.
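The idea behind structured extraction is that the model's output must parse into a fixed set of typed fields before anything downstream uses it. The post doesn't publish its schema, so the fields below (`provider`, `http_status`, `error_message`, `action`) are hypothetical. To keep the sketch dependency-free, a stdlib dataclass stands in for the Pydantic model; with Pydantic v2 the validation step would instead be a single `ErrorContext.model_validate_json(raw)` call.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class ErrorContext:
    # Hypothetical fields; the real schema isn't given in the post.
    provider: str
    http_status: int
    error_message: str
    action: str


def parse_error_context(raw_json: str) -> ErrorContext:
    """Validate the model's JSON output into a typed record, ignoring extra keys."""
    data = json.loads(raw_json)
    missing = [f.name for f in fields(ErrorContext) if f.name not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    ctx = ErrorContext(**{f.name: data[f.name] for f in fields(ErrorContext)})
    # Dataclasses don't enforce types at runtime, so check the non-string field.
    if not isinstance(ctx.http_status, int):
        raise ValueError("http_status must be an integer")
    return ctx
```

Rejecting incomplete output early means a malformed extraction fails loudly instead of quietly producing vague resolution steps.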


Here's where most AI features fail: they ship without knowing if they actually work. We took a different approach.

First, I connected the Lambda function to LangSmith for full observability. Then I created real errors in our dev environment and ran them through the resolution agent. In LangSmith, I manually corrected the outputs to create a golden dataset. This became our evaluation benchmark.

Now every prompt change could be measured. Did the new prompt improve resolution quality? The evals told us immediately. No more guessing whether changes helped or hurt.
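The real evaluations ran in LangSmith; the framework-free sketch below only illustrates the shape of "measure every prompt change against the golden dataset". The post doesn't say how outputs were scored, so the keyword-recall metric and the `must_mention` field (key terms taken from each hand-corrected resolution) are hypothetical stand-ins for whatever evaluator you use.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class GoldenExample:
    error_log: str
    # Hypothetical: key terms from the hand-corrected resolution.
    must_mention: list[str]


def score(output: str, example: GoldenExample) -> float:
    """Keyword recall: fraction of required terms present in the output."""
    text = output.lower()
    hits = sum(term.lower() in text for term in example.must_mention)
    return hits / len(example.must_mention)


def evaluate(agent: Callable[[str], str], dataset: list[GoldenExample]) -> float:
    """Mean score of one prompt/agent version over the whole golden dataset."""
    return mean(score(agent(ex.error_log), ex) for ex in dataset)


def compare(baseline, candidate, dataset: list[GoldenExample]) -> float:
    """Positive delta means the candidate prompt scored higher."""
    return evaluate(candidate, dataset) - evaluate(baseline, dataset)
```

Because both prompt versions run against the same fixed examples, the delta isolates the effect of the prompt change. With an LLM in the loop, outputs vary between runs, so repeating each evaluation a few times before trusting a small delta is worth considering.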

We realised that customers might want to give feedback on the resolution steps once the feature was in production. By implementing a feedback system, we could turn those real-world examples into a better eval dataset.

This created a virtuous cycle: ship → collect feedback → improve evals → enhance prompt → ship better version.
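One way the "collect feedback → improve evals" step could work is sketched below. The `Feedback` shape and the triage rules are hypothetical, not a description of StackOne's system: outputs users marked helpful become reference answers, user corrections replace rejected outputs, and a bare thumbs-down goes to manual review because it says something is wrong without saying what is right.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feedback:
    # Hypothetical shape of one piece of in-product feedback.
    error_log: str
    agent_output: str
    helpful: bool
    correction: Optional[str] = None


def triage(feedback: list[Feedback]) -> tuple[list[dict], list[Feedback]]:
    """Split feedback into new golden examples and items needing manual review."""
    golden, needs_review = [], []
    for fb in feedback:
        if fb.helpful:
            # Confirmed-good output becomes the reference answer as-is.
            golden.append({"input": fb.error_log, "reference": fb.agent_output})
        elif fb.correction:
            # The user's correction replaces the output they rejected.
            golden.append({"input": fb.error_log, "reference": fb.correction})
        else:
            # A bare thumbs-down needs a human to write the right answer.
            needs_review.append(fb)
    return golden, needs_review
```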


The frontend integration was straightforward thanks to our design and engineering team. We exposed a single endpoint /ai/resolutions that accepts error logs and returns resolution steps.
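The post says the agent runs as a Lambda function and is exposed at /ai/resolutions, but doesn't describe the wiring. The sketch below assumes an API Gateway Lambda proxy integration (request JSON in `event["body"]`, response as `statusCode`/`headers`/`body`); the `error_log` and `resolution_steps` field names and the `resolve` stub are hypothetical placeholders for the real agent call.

```python
import json


def resolve(error_log: str) -> list[str]:
    """Hypothetical stub standing in for the resolution agent."""
    return [f"Check the credentials referenced in: {error_log[:40]}"]


def _response(status: int, payload: dict) -> dict:
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def handler(event, context):
    """Lambda entry point: accept an error log, return resolution steps."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be JSON"})
    if not isinstance(body, dict):
        return _response(400, {"error": "body must be a JSON object"})
    error_log = body.get("error_log")
    if not isinstance(error_log, str) or not error_log.strip():
        return _response(400, {"error": "error_log is required"})
    return _response(200, {"resolution_steps": resolve(error_log)})
```

Keeping the contract to a single request field and a single response field is what makes the frontend side straightforward: the dashboard only needs to post the log it already displays and render a list.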

The entire feature went from concept to production in one month. Not because we rushed, but because we focused on what mattered: knowing whether it actually worked. Evals aren't an afterthought in AI development; they're the foundation that makes shipping with confidence possible.